AI@Home, Part 2: Open WebUI

In the first part, we looked at Ollama. Now let's dig a bit deeper into Open WebUI, the piece that glues everything together.

Open WebUI is an open-source, self-hosted interface for interacting with various Large Language Models (LLMs). It lets users work with LLMs running locally on their own servers or cloud instances, without depending on third-party services. Here are some key aspects of Open WebUI:

- Self-Hosted and Offline: Users can host it themselves, which keeps their data private and allows offline operation.

- Extensible and Customizable: It supports extensions and plugins for additional functionality, allowing users to tailor the platform to their specific needs.

- Support for Multiple LLMs: Open WebUI works with LLM runners such as Ollama and can also be connected to APIs from providers such as OpenAI.

- Admin Control: The first user account gets admin privileges, which allow it to manage other users, settings, and models.

- Docker-Based Deployment: Installation and updates are usually handled through Docker, which runs the interface in an isolated environment.

- User Interface: It features an intuitive chat-based UI for interacting with language models.

- Pipeline Plugin Framework: It allows integrating custom logic and Python libraries for features such as function calling, rate limiting, translation, and toxic message filtering.

- Community-Driven Development: As a community-driven project, Open WebUI evolves continuously through contributions from developers worldwide, incorporating feedback to improve its features.

Overall, Open WebUI empowers users by providing control over their interactions with LLMs while offering flexibility and customization options.
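To make the pipeline idea a bit more concrete, here is a minimal sketch of a rate-limiting filter, one of the use cases listed above. It assumes a filter hook called `inlet` that sees each request before it reaches the model; the class name, its parameters, and the exact signature are hypothetical, and the interface Open WebUI actually expects should be taken from its Pipelines documentation.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict, Optional


class RateLimitFilter:
    """Hypothetical filter: at most max_requests per user within a sliding time window."""

    def __init__(self, max_requests: int = 10, window_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the logic can be tested without waiting
        # Per-user timestamps of recent requests.
        self._history: Dict[str, Deque[float]] = defaultdict(deque)

    def inlet(self, body: dict, user: Optional[dict] = None) -> dict:
        """Runs before the request is passed to the model (assumed hook name)."""
        user_id = (user or {}).get("id", "anonymous")
        now = self.clock()
        history = self._history[user_id]
        # Forget requests that have left the time window.
        while history and now - history[0] >= self.window_seconds:
            history.popleft()
        if len(history) >= self.max_requests:
            raise RuntimeError(f"Rate limit exceeded for user {user_id!r}")
        history.append(now)
        return body  # the request passes through unchanged
```

Rejected requests are signalled by raising an exception here; a real filter might instead return a modified body, depending on what the framework expects.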

Requirements

Open WebUI itself has modest requirements: any decent machine should be able to run it, since the heavy lifting (running the models) is done by Ollama, not by the UI. In my installation, it runs on the same host as all the other AI@Home components.

Installation

As described here, there are a couple of ways to run the UI. With Docker and a (dockerized) Ollama on the same host:

docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:mainOr with Ollama running on a separate machine:

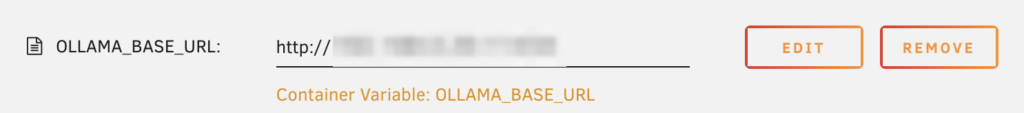

docker run -d -p 3000:8080 -e OLLAMA_BASE_URL=https://example.com -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:mainUsing Unraid, it’s again a breeze as it is available in the community applications:

When editing the container settings, there is an entry to specify the Ollama address.
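Before moving on, it can be worth checking that the configured Ollama address is actually reachable. The following is a minimal sketch, assuming Ollama listens on its default port 11434 on the same host (substitute whatever you set as `OLLAMA_BASE_URL`). It calls Ollama's `/api/tags` endpoint, which lists the locally available models. Run it from the host: inside the Open WebUI container, `localhost` refers to the container itself.

```python
import json
import urllib.request
from typing import List

# Assumption: Ollama on its default port; replace with your OLLAMA_BASE_URL.
OLLAMA_URL = "http://localhost:11434"


def parse_model_names(payload: dict) -> List[str]:
    """Extract the model names from an /api/tags response."""
    return [m["name"] for m in payload.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> List[str]:
    """Query /api/tags, which lists the models Ollama has downloaded."""
    url = base_url.rstrip("/") + "/api/tags"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return parse_model_names(json.load(resp))


if __name__ == "__main__":
    try:
        print("Ollama reachable, models:", list_local_models())
    except OSError as exc:  # connection refused, DNS failure, timeout, ...
        print("Ollama not reachable:", exc)
```

An empty list simply means Ollama is running but no model has been downloaded yet.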

That’s it for the installation.

Usage

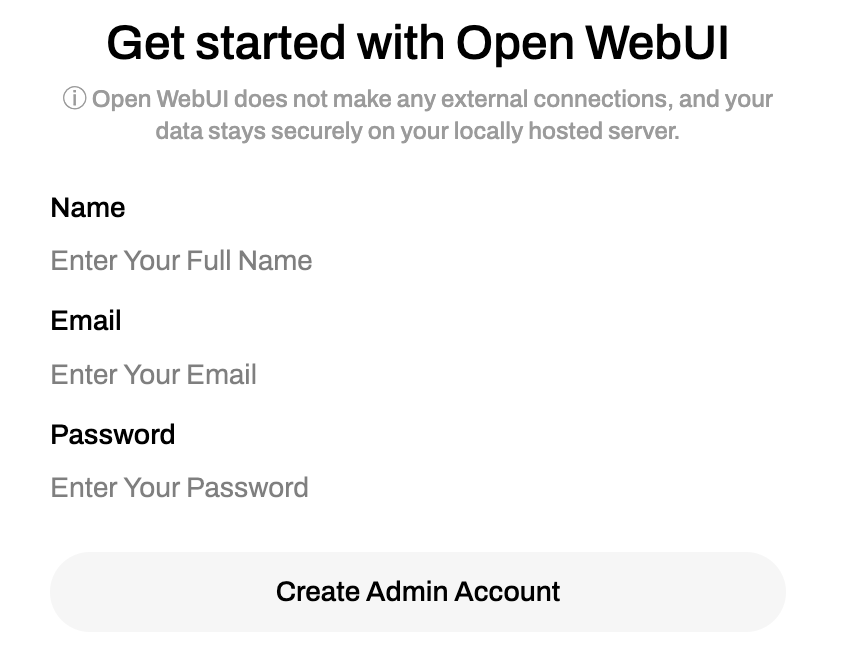

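Now the fun part begins. Let's start by downloading a model and having a simple chat. Before doing so, an initial setup is required: when you open the UI for the first time (on port 3000 with the Docker commands above), you are asked to create an account, and this first account becomes the admin.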

As long as you are doing this in your private network, you can be relaxed about the username and password. If you want to expose it to the public internet, I would highly recommend integrating a single sign-on (SSO) solution from one of the well-known identity providers. For my setup, I chose Google OAuth, which I will cover later.

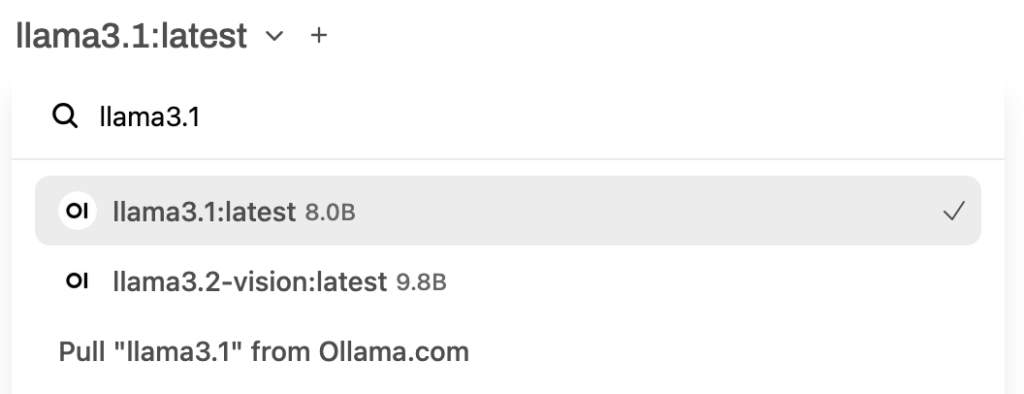

Once the admin account has been created, you will see a nice user interface. In the top left corner, a model can be selected or downloaded from ollama.com. This is the first thing to do. Depending on your hardware, Llama 3.1 is a good start. It can be downloaded by simply entering llama3.1 and then selecting "Pull Llama3.1 from Ollama.com".
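The same download can also be triggered without the UI, for example from a script. Here is a sketch against Ollama's `/api/pull` endpoint, again assuming the default address. The endpoint streams one JSON status object per line, and the current API documentation names the request field `model`. The helper names are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumption: default Ollama address


def format_progress(event: dict) -> str:
    """Turn one streamed status object into a readable progress line."""
    status = event.get("status", "")
    total, done = event.get("total"), event.get("completed")
    if total and done is not None:
        return f"{status}: {100 * done / total:.1f}%"
    return status


def pull_model(name: str, base_url: str = OLLAMA_URL) -> None:
    """Ask Ollama to download a model and print its streamed progress."""
    req = urllib.request.Request(
        base_url.rstrip("/") + "/api/pull",
        data=json.dumps({"model": name}).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        for line in resp:  # newline-delimited JSON
            if line.strip():
                print(format_progress(json.loads(line)))


if __name__ == "__main__":
    pull_model("llama3.1")
```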

Once downloaded, the LLM is ready to chat, and you can start experimenting with the available models. Keep in mind to start with smaller models. In theory a lot is possible; in practice it heavily depends on the available GPU, above all on its video memory (VRAM). In my case, with a 12GB RTX 3080, models up to 14B parameters work quite well. If you are in the comfortable situation of having a card with 24GB (RTX 3090, 4090 …), 32B models should run well, too.
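A back-of-the-envelope calculation explains these numbers. The sketch below assumes roughly 4.5 bits per weight, which is typical of the 4-bit quantized variants many Ollama model tags use by default, plus a flat 1.5 GB for context (KV cache) and runtime buffers. Both values are assumptions, and longer contexts need more memory.

```python
def estimate_vram_gb(params_billion: float, bits_per_weight: float = 4.5,
                     overhead_gb: float = 1.5) -> float:
    """Rough VRAM need: quantized weights plus a flat allowance for context and buffers."""
    # 1e9 parameters * bits / 8 bits-per-byte = GB (1e9 bytes) per billion parameters
    weights_gb = params_billion * bits_per_weight / 8
    return weights_gb + overhead_gb


def fits(params_billion: float, vram_gb: float, **kwargs: float) -> bool:
    """True if the rough estimate stays within the available VRAM."""
    return estimate_vram_gb(params_billion, **kwargs) <= vram_gb


if __name__ == "__main__":
    for size in (8, 14, 32):
        for vram in (12, 24):
            need = estimate_vram_gb(size)
            print(f"{size}B model on {vram} GB: ~{need:.1f} GB needed, fits: {fits(size, vram)}")
```

With these assumptions, a 14B model needs about 9.4 GB and fits a 12GB card, while a 32B model needs about 19.5 GB, which fits into 24GB but not into 12GB.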

Retrieval Augmented Generation

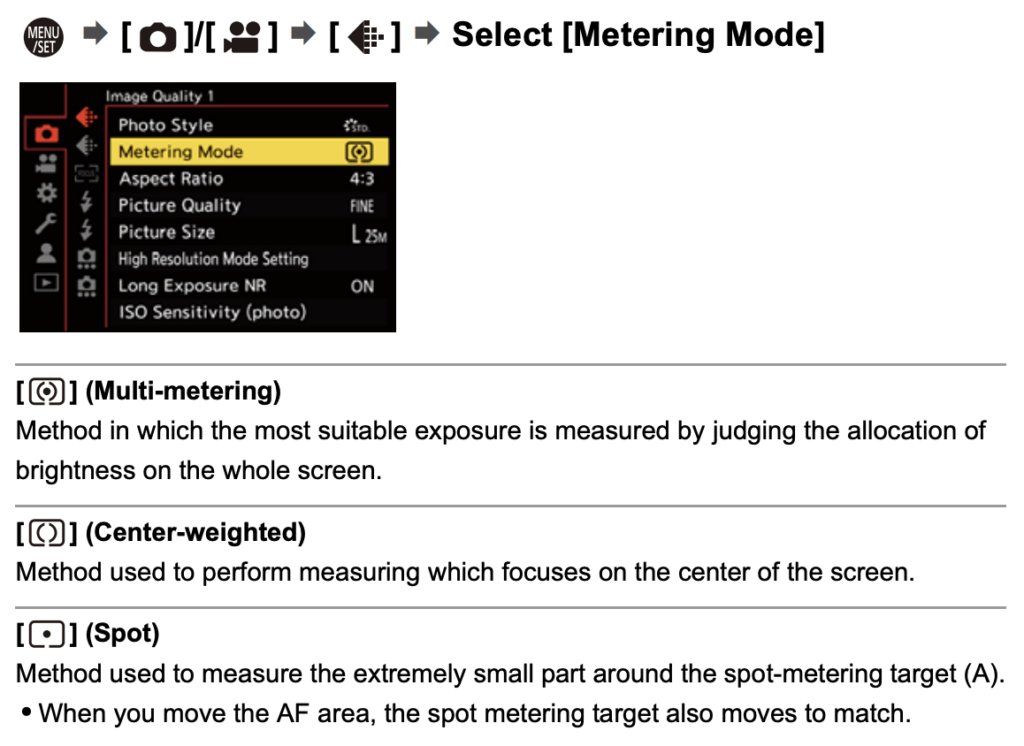

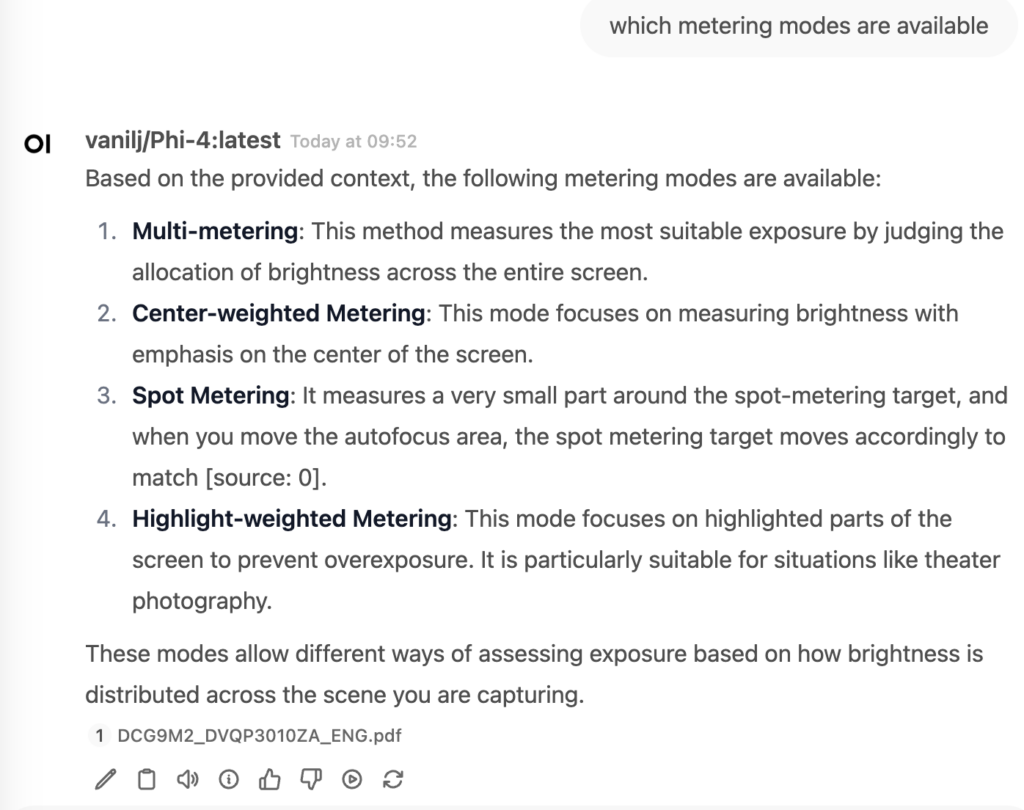

Moving beyond casual conversations, it's also possible to provide a specific context and have a discussion about it. PDF documents are an excellent source for this. Simply drag and drop a PDF document onto the UI, and you're all set to ask questions about its content. For instance, I uploaded the latest PDF manual for my Panasonic G9M2 camera. Without any adjustments, the model was ready to answer basic questions about the manual. In the screenshots, the left side shows a snippet from the camera manual, and on the right the model correctly answers the question about the metering modes.

This technique is called Retrieval Augmented Generation (RAG), a fascinating concept! Instead of relying only on what the model learned during training, the parts of the provided context that are relevant to the question are retrieved and passed to the model together with the question. The same technique is used by innovative products like the impressive perplexity.ai search engine. There, the context comes from a web search: a query is sent to a search engine, and the language model uses the results to generate a response. While I have no doubt that perplexity.ai employs a myriad of advanced techniques and a highly capable language model, we can emulate this approach by integrating Open WebUI with a search engine such as SearXNG. We'll dive into this in more detail later.
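The retrieve-then-generate idea fits into a few lines. This is a toy version: Open WebUI uses an embedding model and a vector store for the retrieval step, whereas this sketch swaps in a simple bag-of-words cosine similarity so it runs without extra dependencies. The chunk size, the prompt wording, and all function names are illustrative assumptions.

```python
import math
import re
from collections import Counter
from typing import List


def chunk_text(text: str, max_words: int = 50) -> List[str]:
    """Split a document into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def vectorize(text: str) -> Counter:
    """Toy stand-in for an embedding model: lower-cased word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity of two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, chunks: List[str], k: int = 3) -> List[str]:
    """Return the k chunks most similar to the question."""
    q = vectorize(question)
    return sorted(chunks, key=lambda c: cosine(q, vectorize(c)), reverse=True)[:k]


def build_prompt(question: str, context: List[str]) -> str:
    """Augment the question with the retrieved chunks; this prompt goes to the LLM."""
    joined = "\n---\n".join(context)
    return ("Answer the question using only the context below.\n\n"
            f"Context:\n{joined}\n\nQuestion: {question}")
```

For the PDF example, `chunk_text` would run once on the extracted manual text, and `retrieve` plus `build_prompt` would run for every question. For web search, the search results take the place of the chunks.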

Image Recognition

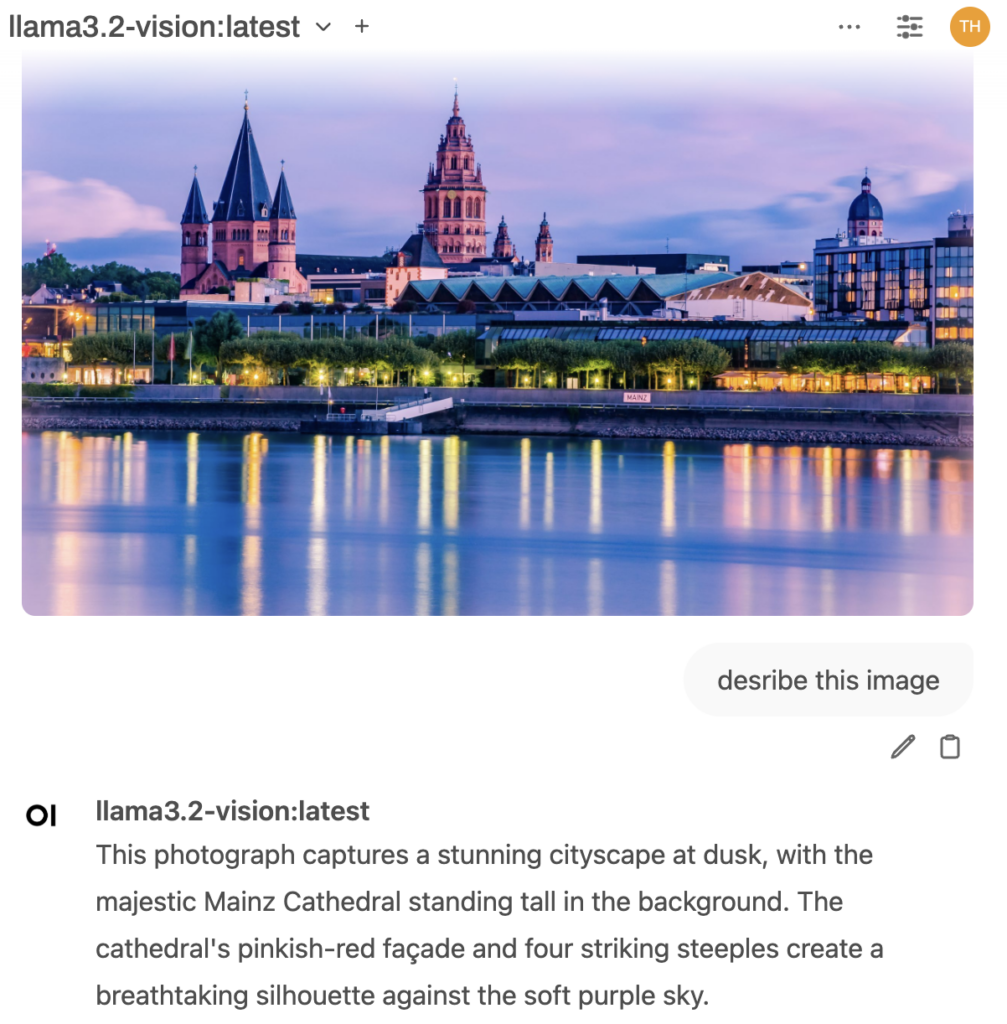

However, understanding a given textual context is not the end. Certain models, often referred to as multimodal, can also work with non-textual input such as images. This is possible in this setup as well, although only a handful of vision models are available for Ollama. My personal experience is limited to llama3.2-vision in the 11B size, which already performs impressively well. It can be downloaded in Open WebUI by simply searching for it in the model selection box (top left corner). For example, I dragged a stock photo of my lovely hometown, Mainz, onto the UI and asked the model to describe the image:

It correctly identifies the cathedral of Mainz. This is just freakin' awesome! Remember, this runs on slightly outdated PC hardware (4 years old). With a slightly more specific prompt, it would be an easy task to generate captions (alt texts), which will soon be required on many sites under the European Accessibility Act. Tagging, of course, is another straightforward task. This is not only simple to do in the UI: Open WebUI and Ollama also offer an OpenAI-compatible API, which makes it easy to caption and tag assets in bulk. That's another topic I will discuss in an upcoming post.
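As a preview, a bulk run could look roughly like the following sketch. It assumes Ollama's OpenAI-compatible endpoint on the default port (`/v1/chat/completions`) and that the server accepts images in the standard OpenAI `image_url` format with a base64 data URL. The prompt, the `Tags:` answer convention, the `*.jpg` filter, and all function names are hypothetical choices for illustration. Open WebUI's own API would additionally require an API key.

```python
import base64
import json
import mimetypes
import urllib.request
from pathlib import Path
from typing import Dict, List, Tuple

BASE_URL = "http://localhost:11434/v1"  # assumption: Ollama's OpenAI-compatible API
PROMPT = ("Write a one-sentence alt text for this image, then a line "
          "'Tags:' followed by five comma-separated keywords.")


def build_caption_request(image_bytes: bytes, mime: str,
                          model: str = "llama3.2-vision") -> dict:
    """OpenAI-style chat request with the image embedded as a data URL."""
    data_url = f"data:{mime};base64," + base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "messages": [{
            "role": "user",
            "content": [
                {"type": "text", "text": PROMPT},
                {"type": "image_url", "image_url": {"url": data_url}},
            ],
        }],
    }


def parse_caption(text: str) -> Tuple[str, List[str]]:
    """Split the model's answer into caption and tags (best effort)."""
    caption, _, tag_part = text.partition("Tags:")
    tags = [t.strip() for t in tag_part.split(",") if t.strip()]
    return caption.strip(), tags


def caption_folder(folder: str) -> Dict[str, Tuple[str, List[str]]]:
    """Caption and tag every .jpg file in a folder, one request per image."""
    results = {}
    for path in sorted(Path(folder).glob("*.jpg")):
        mime = mimetypes.guess_type(path.name)[0] or "image/jpeg"
        payload = build_caption_request(path.read_bytes(), mime)
        req = urllib.request.Request(
            BASE_URL + "/chat/completions",
            data=json.dumps(payload).encode(),
            headers={"Content-Type": "application/json"},
        )
        with urllib.request.urlopen(req) as resp:
            answer = json.load(resp)["choices"][0]["message"]["content"]
        results[path.name] = parse_caption(answer)
    return results
```

Because the request follows the OpenAI chat format, the same code should in principle work against other OpenAI-compatible servers by changing `BASE_URL` and the model name.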

Development support

The final topic I want to address is how easy it is to get basic support for development. While it can't compete with big players like OpenAI or Anthropic's Claude, this modest, home-based solution can still be helpful for beginners and intermediate developers. It can help with learning and improving your skills in a specific programming language (e.g., Python, Java, TypeScript), all at no cost and without data privacy concerns, which naturally applies to all the use cases in this post.

A good starting point for this is the qwen2.5-coder model, which I use in the 14B parameter version. Again, it can easily be downloaded from the UI. Once it is selected, give it a try, for example with the following prompt:

create a html page using a canvas and javascript to simulate the three body problem. Use different masses between 50 and 100 for the bodies and illustrate the masses by the diameter of the body. Create a reset button to reset position, velocity and mass randomly

and enjoy the show.
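The model writes JavaScript, but to judge whether the generated page does something sensible, it helps to know what its core loop should compute. Here is an independent Python sketch of that physics (not the model's output): pairwise Newtonian gravity integrated with a semi-implicit Euler step. The gravitational constant, the softening term, the canvas size, and the mass-to-diameter scale are assumptions chosen for illustration.

```python
import math
import random
from dataclasses import dataclass
from typing import List, Optional

G = 1.0          # assumption: gravitational constant in arbitrary simulation units
SOFTENING = 5.0  # assumption: keeps forces finite when bodies pass very close


@dataclass
class Body:
    x: float
    y: float
    vx: float
    vy: float
    mass: float

    @property
    def diameter(self) -> float:
        # Illustrate the mass by the diameter (assumed linear scale: 10 to 20 px).
        return 0.2 * self.mass


def random_bodies(n: int = 3, width: float = 800.0, height: float = 600.0,
                  rng: Optional[random.Random] = None) -> List[Body]:
    """What the reset button should do: random position, velocity and a mass in [50, 100)."""
    rng = rng or random.Random()
    return [Body(x=rng.uniform(0, width), y=rng.uniform(0, height),
                 vx=rng.uniform(-1, 1), vy=rng.uniform(-1, 1),
                 mass=rng.uniform(50, 100))
            for _ in range(n)]


def step(bodies: List[Body], dt: float) -> None:
    """Advance all bodies by dt: pairwise gravity, then semi-implicit Euler."""
    ax = [0.0] * len(bodies)
    ay = [0.0] * len(bodies)
    for i, a in enumerate(bodies):
        for j, b in enumerate(bodies):
            if i == j:
                continue
            dx, dy = b.x - a.x, b.y - a.y
            dist2 = dx * dx + dy * dy + SOFTENING ** 2
            inv_r3 = 1.0 / (dist2 * math.sqrt(dist2))
            # Acceleration of a towards b: G * m_b * r_vec / |r|^3
            ax[i] += G * b.mass * dx * inv_r3
            ay[i] += G * b.mass * dy * inv_r3
    for body, ax_i, ay_i in zip(bodies, ax, ay):
        body.vx += ax_i * dt      # update the velocity first ...
        body.vy += ay_i * dt
        body.x += body.vx * dt    # ... then the position with the new velocity
        body.y += body.vy * dt
```

Because the pairwise forces are equal and opposite, the total momentum stays constant. That makes a quick sanity check for any generated simulation. In the browser version, `step` would typically run inside a `requestAnimationFrame` loop, drawing each body as a circle of the given diameter on the canvas.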

In this post, we have explored the basics of Ollama and Open WebUI: how to download and use different models, how to use retrieval augmented generation and image recognition, and how large language models can assist with development tasks. In the next post, I will cover how to install a meta search engine and connect it to Open WebUI to enable RAG on web searches.